NLopt Tutorial

From AbInitio

| Revision as of 22:11, 13 November 2008 (edit) Stevenj (Talk | contribs) (→Example nonlinearly constrained problem) ← Previous diff |

Revision as of 22:45, 13 November 2008 (edit) Stevenj (Talk | contribs) Next diff → |

||

| Line 13: | Line 13: | ||

| (This problem is especially trivial, because by formulating it in terms of the cube root of ''x''<sub>2</sub> you can turn it into a linear-programming problem, but we won't do that here.) | (This problem is especially trivial, because by formulating it in terms of the cube root of ''x''<sub>2</sub> you can turn it into a linear-programming problem, but we won't do that here.) | ||

| + | |||

| + | ==Example in C/C++== | ||

| + | To implement the above example in C or C++, we would first do: | ||

| + | |||

| + | #include <math.h> | ||

| + | #include <nlopt.h> | ||

| + | |||

| + | to include the NLopt header file as well as the standard math header file (needed for things like the <code>sqrt</code> function and the <code>HUGE_VAL</code> constant), then we would define our objective function as: | ||

| + | |||

| + | double myfunc(int n, const double *x, double *grad, void *my_func_data) | ||

| + | { | ||

| + | if (grad) { | ||

| + | grad[0] = 0.0; | ||

| + | grad[1] = -0.5 / sqrt(x[1]); | ||

| + | } | ||

| + | return sqrt(x[1]); | ||

| + | } | ||

| + | |||

| + | There are several things to notice here. First, since this is C, our indices are zero-based, so we have <code>x[0]</code> and <code>x[1]</code> instead of ''x''<sub>1</sub> and ''x''<sub>2</sub>. The return value of our function is the objective <math>\sqrt{x_2}</math>. Also, if the parameter <code>grad</code> is not <code>NULL</code>, then we set <code>grad[0]</code> and <code>grad[1]</code> to the partial derivatives of our objective with respect to <code>x[0]</code> and <code>x[1]</code>. The gradient is only needed for [[NLopt Introduction#Gradient-based versus derivative-free algorithms|gradient-based algorithms]]; if you use a derivative-free optimization algorithm, <code>grad</code> will always be <code>NULL</code> and you need never compute any derivatives. Finally, we have an extra parameter <code>my_func_data</code> that can be used to pass additional data to <code>myfunc</code>, but no additional data is needed here so that parameter is unused. | ||

| + | |||

| + | For the constraints, on the other hand, we ''will'' have additional data. Each constraint is parameterized by two numbers ''a'' and ''b'', so we will declare a data structure to hold this information: | ||

| + | |||

| + | typedef struct { | ||

| + | double a, b; | ||

| + | } my_constraint_data; | ||

| + | |||

| + | Then, we implement our constraint function as follows. (You always create a ''single'' constraint function, even if you have several very different constraints—your constraint function can do different things depending on what is passed as the <code>void *data</code> parameter.) | ||

| + | |||

| + | double myconstraint(int n, const double *x, double *grad, void *data) | ||

| + | { | ||

| + | my_constraint_data *d = (my_constraint_data *) data; | ||

| + | double a = d->a, b = d->b; | ||

| + | if (grad) { | ||

| + | grad[0] = 3 * (a*x[0] + b) * (a*x[0] + b); | ||

| + | grad[1] = -1.0; | ||

| + | } | ||

| + | return ((a*x[0] + b) * (a*x[0] + b) * (a*x[0] + b) - x[1]); | ||

| + | } | ||

| + | |||

| + | The form of the constraint function is the same as that of the objective function. Here, the <code>data</code> parameter will actually be a pointer to <code>my_constraint_data</code> (because this is the type that we will pass to <code>nlopt_minimize_constrained</code> below), so we use a typecast to get the constraint data. NLopt always expects constraints to be of the form <code>myconstraint</code>('''x''') ≤ 0, so we implement the constraint ''x''<sub>2</sub> ≥ (''a'' ''x''<sub>1</sub> + ''b'')<sup>3</sup> as the function (''a'' ''x''<sub>1</sub> + ''b'')<sup>3</sup> − ''x''<sub>2</sub>. Again, we only compute the gradient if <code>grad</code> is non-<code>NULL</code>, which will never occur if we use a derivative-free optimization algorithm. | ||

| + | |||

| + | Finally, we need to call nlopt_minimize_constrained from our program somewhere. We will, of course, need to declare arrays to hold the solution '''x''', the upper and lower bounds, and the constraint data. This will look something like: | ||

| + | |||

| + | my_constraint_data data[2] = { {2,0}, {-1,1} }; | ||

| + | double x[2] = { 1.234, 5.678 }; /* ''some initial guess'' */ | ||

| + | double lb[2] = { -HUGE_VAL, 0 }, ub[2] = { HUGE_VAL, HUGE_VAL }; /* ''lower and upper bounds'' */ | ||

| + | double minf; /* ''the minimum objective value, upon return'' */ | ||

| + | |||

| + | if (nlopt_minimize_constrained(NLOPT_LD_MMA, 2, myfunc, NULL, | ||

| + | 2, myconstraint, data, sizeof(my_constraint_data), | ||

| + | lb, ub, x, &minf, | ||

| + | -HUGE_VAL, 0.0, 0.0, 1e-4, NULL, 0, 0.0) < 0) { | ||

| + | printf("nlopt failed!\n"); | ||

| + | } | ||

| + | else { | ||

| + | printf("found minimum at f(%g,%g) = %g\n", x[0], x[1], minf); | ||

| + | } | ||

| + | |||

| + | The arguments to <code>nlopt_minimize_constrained</code> are described in detail by the [[NLopt Reference|reference manual]]. Most of them are straightforward. Notice that we pass <code>data</code> as the data for <code>myconstraint</code> — when <code>myconstraint</code> is called, it will be passed pointers to <code>data[0]</code> and <code>data[1]</code> for the two constraints. The last set of parameters (<code>-HUGE_VAL, 0.0, 0.0, 1e-4, NULL, 0, 0.0</code>) are [[NLopt Introduction#Termination conditions|termination criteria]] — the only one we are using is that we are setting the relative tolerance on '''x''' to 10<sup>−4</sup>, and the other conditions are set to defaults where they do nothing. | ||

| + | |||

| + | <code>nlopt_minimize_constrained</code> will return a negative result code on failure, but this usually only happens if you pass invalid parameters, it runs out of memory, or something like that. Otherwise, we print out the minimum function value and the corresponding parameters '''x'''. | ||

Revision as of 22:45, 13 November 2008

In this tutorial, we illustrate the usage of NLopt in various languages via one or two trivial examples.

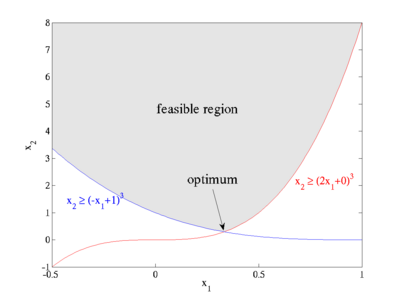

Example nonlinearly constrained problem

As a first example, we'll look at the nonlinearly constrained minimization problem:

- subject to

,

,  , and

, and

for parameters a1=2, b1=0, a2=-1, b2=1.

The feasible region defined by these constraints is plotted at right: x2 is constrained to lie at the maximum of two cubics, and the optimum point is located at the intersection (1/3, 8/27) where the objective function takes on the value  .

.

(This problem is especially trivial, because by formulating it in terms of the cube root of x2 you can turn it into a linear-programming problem, but we won't do that here.)

Example in C/C++

To implement the above example in C or C++, we would first do:

#include <math.h> #include <nlopt.h>

to include the NLopt header file as well as the standard math header file (needed for things like the sqrt function and the HUGE_VAL constant), then we would define our objective function as:

double myfunc(int n, const double *x, double *grad, void *my_func_data)

{

if (grad) {

grad[0] = 0.0;

grad[1] = -0.5 / sqrt(x[1]);

}

return sqrt(x[1]);

}

There are several things to notice here. First, since this is C, our indices are zero-based, so we have x[0] and x[1] instead of x1 and x2. The return value of our function is the objective  . Also, if the parameter

. Also, if the parameter grad is not NULL, then we set grad[0] and grad[1] to the partial derivatives of our objective with respect to x[0] and x[1]. The gradient is only needed for gradient-based algorithms; if you use a derivative-free optimization algorithm, grad will always be NULL and you need never compute any derivatives. Finally, we have an extra parameter my_func_data that can be used to pass additional data to myfunc, but no additional data is needed here so that parameter is unused.

For the constraints, on the other hand, we will have additional data. Each constraint is parameterized by two numbers a and b, so we will declare a data structure to hold this information:

typedef struct {

double a, b;

} my_constraint_data;

Then, we implement our constraint function as follows. (You always create a single constraint function, even if you have several very different constraints—your constraint function can do different things depending on what is passed as the void *data parameter.)

double myconstraint(int n, const double *x, double *grad, void *data)

{

my_constraint_data *d = (my_constraint_data *) data;

double a = d->a, b = d->b;

if (grad) {

grad[0] = 3 * (a*x[0] + b) * (a*x[0] + b);

grad[1] = -1.0;

}

return ((a*x[0] + b) * (a*x[0] + b) * (a*x[0] + b) - x[1]);

}

The form of the constraint function is the same as that of the objective function. Here, the data parameter will actually be a pointer to my_constraint_data (because this is the type that we will pass to nlopt_minimize_constrained below), so we use a typecast to get the constraint data. NLopt always expects constraints to be of the form myconstraint(x) ≤ 0, so we implement the constraint x2 ≥ (a x1 + b)3 as the function (a x1 + b)3 − x2. Again, we only compute the gradient if grad is non-NULL, which will never occur if we use a derivative-free optimization algorithm.

Finally, we need to call nlopt_minimize_constrained from our program somewhere. We will, of course, need to declare arrays to hold the solution x, the upper and lower bounds, and the constraint data. This will look something like:

my_constraint_data data[2] = { {2,0}, {-1,1} };

double x[2] = { 1.234, 5.678 }; /* some initial guess */

double lb[2] = { -HUGE_VAL, 0 }, ub[2] = { HUGE_VAL, HUGE_VAL }; /* lower and upper bounds */

double minf; /* the minimum objective value, upon return */

if (nlopt_minimize_constrained(NLOPT_LD_MMA, 2, myfunc, NULL,

2, myconstraint, data, sizeof(my_constraint_data),

lb, ub, x, &minf,

-HUGE_VAL, 0.0, 0.0, 1e-4, NULL, 0, 0.0) < 0) {

printf("nlopt failed!\n");

}

else {

printf("found minimum at f(%g,%g) = %g\n", x[0], x[1], minf);

}

The arguments to nlopt_minimize_constrained are described in detail by the reference manual. Most of them are straightforward. Notice that we pass data as the data for myconstraint — when myconstraint is called, it will be passed pointers to data[0] and data[1] for the two constraints. The last set of parameters (-HUGE_VAL, 0.0, 0.0, 1e-4, NULL, 0, 0.0) are termination criteria — the only one we are using is that we are setting the relative tolerance on x to 10−4, and the other conditions are set to defaults where they do nothing.

nlopt_minimize_constrained will return a negative result code on failure, but this usually only happens if you pass invalid parameters, it runs out of memory, or something like that. Otherwise, we print out the minimum function value and the corresponding parameters x.